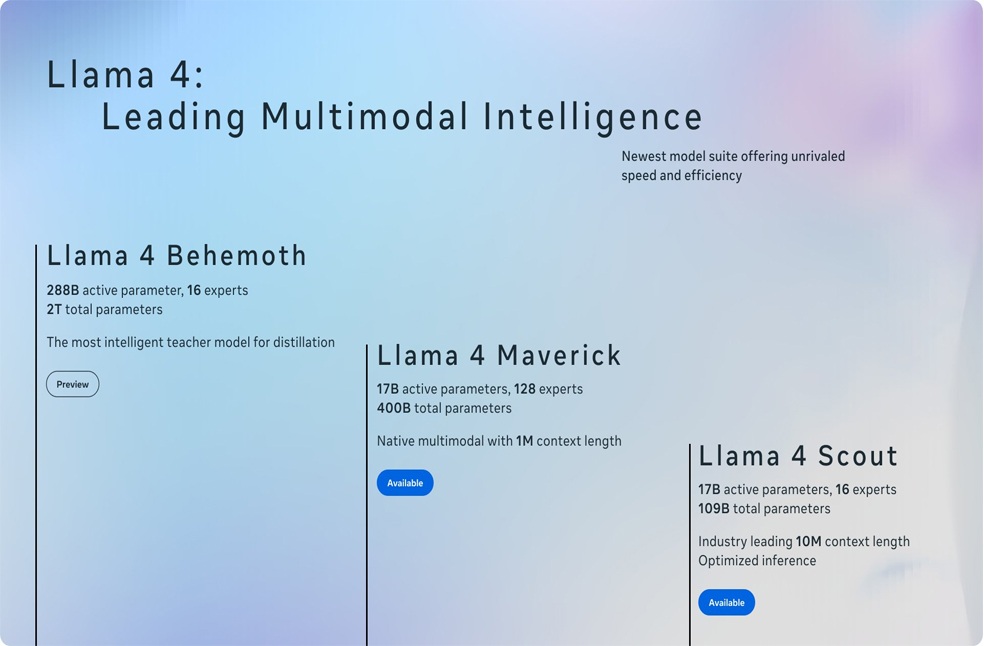

Menlo Park, California: Meta Platforms has officially rolled out its newest large language models (LLMs), Llama 4 Scout and Llama 4 Maverick, describing them as the company’s most sophisticated multimodal AI systems to date.

These models can process and translate between multiple data formats, including text, images, audio, and video, enabling seamless interaction across formats.

According to Meta, both models are now available as open-source software, a move the company presents as part of its commitment to transparent AI development.

The tech giant also previewed an upcoming model, Llama 4 Behemoth, describing it as one of the world's most intelligent LLMs and the most powerful model Meta has built. Behemoth is expected to serve as a teacher model that helps train Meta's future models.

The launch follows earlier reports that Meta had delayed the release of Llama 4 over performance concerns.

During development, the models reportedly fell short on certain technical benchmarks, particularly in reasoning and mathematics, and were less capable than competitors such as OpenAI's models at humanlike voice conversations.

Meta nonetheless continues to push ahead on AI. The company plans to spend up to $65bn in 2025 to expand its AI infrastructure, part of a broader push by major tech firms to generate meaningful returns from their AI investments in an increasingly competitive market.